Artificial intelligence (AI) refers to the creation of machines, particularly computer systems, that are capable of simulating human intelligence. AI encompasses applications such as expert systems, natural language processing, speech recognition, and machine vision.

Here are some examples of artificial intelligence applications:

| AI Application | Examples |
|---|---|
| Natural Language Processing | Google Translate, Amazon Alexa, Siri |
| Computer Vision | Face recognition technology, autonomous vehicles, object detection in security cameras |
| Robotics | Industrial robots, surgical robots, drones |
| Expert Systems | Medical diagnosis systems, legal advice systems |
| Machine Learning | Fraud detection systems, recommendation engines, image and speech recognition |
| Virtual Assistants | Apple Siri, Google Assistant, Amazon Alexa |

In natural language processing, for example, AI technology enables computers to understand, interpret, and generate human language.

How Does Artificial Intelligence Work?

As excitement about AI has grown, vendors have rushed to showcase how their products and services use it. Often, however, what they call AI is just one component of the technology, such as machine learning.

For AI to function correctly, it requires a specialized hardware and software foundation to write and train machine learning algorithms.

No programming language is synonymous with AI, but Python, R, Java, C++, and Julia have become popular choices among AI developers.

Typically, AI systems work by ingesting large amounts of labeled training data, analyzing the data for patterns and correlations, and using these patterns to predict future outcomes. This allows a chatbot, for example, to learn from text examples and generate realistic conversations with people, or an image recognition tool to identify and describe objects in images after reviewing millions of examples. New and rapidly improving generative AI techniques can create lifelike text, images, music, and other media.
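As a rough illustration of that train-then-predict loop, here is a minimal sketch in Python. It assumes a toy data set and a simple nearest-centroid rule, both invented for this example; the function names `train` and `predict` and the animal measurements are hypothetical, and real systems use far larger data sets and more capable models.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Learn one average point (centroid) per label from labeled examples."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    # Average each feature column separately for every label.
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid lies closest to the new data point."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled training data: (height_cm, weight_kg) -> label
training_data = [
    ((25.0, 4.0), "cat"),
    ((23.0, 5.0), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
]

model = train(training_data)
print(predict(model, (24.0, 4.5)))   # prints "cat"
print(predict(model, (58.0, 28.0)))  # prints "dog"
```

The "pattern" learned here is just the average of each labeled group, but the shape of the process is the same as in larger systems: labeled data goes in, a model comes out, and the model is then applied to data it has not seen before.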

When it comes to programming for AI, the focus is on developing cognitive skills, which can include the following:

Importance of Artificial Intelligence

AI can revolutionize our lives, work, and leisure activities. The technology has been effectively leveraged in business to automate tasks traditionally performed by humans, including customer service, fraud detection, quality control, and lead generation.

AI can outperform humans in various domains, especially in detail-oriented and repetitive tasks such as analyzing large numbers of legal documents to ensure accurate data entry. AI tools complete these jobs quickly and with minimal errors. Thanks to its ability to process massive data sets, AI can also give organizations insights into their operations that they may not have had before.

The burgeoning array of generative AI tools will be critical in diverse areas such as education, marketing, and product design.

Here is why artificial intelligence is so important:

AI has become a crucial part of the operations of some of the world’s largest and most successful companies, including Alphabet, Apple, Microsoft, and Meta, which use AI technologies to improve their performance and gain an edge over rivals. At Alphabet, for example, AI is central to Google’s search engine, to Waymo’s self-driving cars, and to Google Brain, the research team that developed the transformer neural network architecture behind recent breakthroughs in natural language processing.

Advantages and Disadvantages of Artificial Intelligence

AI is advancing rapidly, largely thanks to artificial neural networks and deep learning, technologies that let it process large amounts of data much faster than humans can and often make more accurate predictions.

Every day we create vast amounts of data, far more than a human researcher could process. AI applications that use machine learning can quickly analyze this data and turn it into useful information.

Here we will discuss the advantages and disadvantages of artificial intelligence.

Advantages of Artificial Intelligence:

- AI reduces human errors in decision-making and can improve accuracy.
  Example: Weather forecasting using AI has reduced human errors.

- AI can take on risky tasks that are dangerous for humans.
  Example: AI robots can be used to explore space or defuse bombs.

- Machines with AI can work 24/7 without breaks or getting bored.
  Example: AI can handle queries and issues in educational institutions and helplines.

- AI can automate repetitive tasks, freeing up humans for more creative work.
  Example: AI can be used to speed up document verification in banks.

- Digital assistants and chatbots powered by AI can provide customer service and interact with users.
  Example: Organizations use chatbots to answer customer questions.

- AI can make decisions and carry out actions faster than humans.
  Example: The AI behind chess games makes quick decisions.

- AI is used in daily applications like Siri, Cortana, and OK Google.
  Example: We use AI-powered digital assistants to make phone calls, take selfies, and find locations.

- AI is used in inventions across domains to solve complex problems.
  Example: AI is used to predict breast cancer at earlier stages.

- AI has demonstrated its ability to diagnose specific types of cancers, such as breast cancer and melanoma, as well as or even better than human doctors.

Disadvantages of Artificial Intelligence:

Like any technology, artificial intelligence (AI) has drawbacks as well as benefits. Here are some of them.

- Creating and maintaining AI technology is expensive.

- AI can make people lazy by automating tasks.

- AI may lead to unemployment as machines replace human workers.

- Machines cannot form emotional bonds with humans, and such bonds are essential in team management.

- Machines can only do what they’re programmed to do and may not be able to handle new or unexpected tasks.

Do You Know About Weak AI and Strong AI?

There are two main types of AI: weak AI (also called narrow AI) and strong AI (also known as artificial general intelligence, or AGI). Weak AI is designed to perform specific tasks and is commonly used in industrial robots and virtual personal assistants like Siri. Strong AI, on the other hand, describes systems that could replicate the cognitive abilities of the human brain and use fuzzy logic to find solutions to unfamiliar tasks. No such system exists today. In theory, a strong AI should be able to pass the Turing test and also withstand the Chinese Room argument, a thought experiment that questions whether a program that manipulates symbols truly understands them.

What Are The 4 Types of Artificial Intelligence?

Classifications based on capability usually list three types of artificial intelligence (narrow AI, general AI, and super AI). A widely used classification based on functionality, however, divides AI into four types:

- Reactive Machines – These AI systems can only react to the current situation based on their received data. They cannot form memories or use past experiences to inform future decisions.

- Limited Memory – These AI systems can use past experiences to inform future decisions but only have a limited memory capacity.

- Theory of Mind – These AI systems would understand other entities’ emotions and mental states and use that information to make more informed decisions. This type is still largely a research goal.

- Self-Aware AI – This is the hypothetical type of AI that would fully understand its existence and consciousness. It is currently only a theoretical concept, and researchers are still working to develop it.

Here are examples of the four types of artificial intelligence:

| Type of AI | Example |
|---|---|
| Reactive Machines | Chess-playing AI, voice assistants like Siri |
| Limited Memory | Self-driving cars, fraud detection AI |
| Theory of Mind | Social robots, chatbots that recognize emotions |
| Self-Aware AI | None yet; this type remains theoretical |

How Is AI Technology Used Today?

AI is a rapidly growing field that is being integrated into many types of technology. Here are examples of how AI is being used across technologies and industries today:

- Automation: AI-powered automation tools can expand the volume and types of tasks performed. For instance, robotic process automation (RPA) is a type of software that automates repetitive data processing tasks traditionally done by humans. Combined with machine learning and emerging AI tools, RPA can automate larger portions of enterprise jobs, enabling its tactical bots to pass along intelligence from AI and respond to process changes.

- Machine Learning: This is the science of teaching computers to learn and act without explicit programming. Deep learning, a subset of machine learning, can be considered the automation of predictive analytics. There are three types of machine learning algorithms:

  - Supervised learning: Data sets are labeled so that patterns can be detected and used to label new data sets.

  - Unsupervised learning: Data sets aren’t labeled and are sorted according to similarities or differences.

  - Reinforcement learning: Data sets aren’t labeled, but the AI system is given feedback after performing an action or several actions.

- Machine Vision: This technology allows machines to see, capture, and analyze visual information using a camera, analog-to-digital conversion, and digital signal processing. It is often compared to human eyesight, but machine vision isn’t bound by biology and can be programmed to see through walls, for example. It is used in various applications, from signature identification to medical image analysis. Computer vision, focused on machine-based image processing, is often conflated with machine vision.

- Natural Language Processing (NLP): This is a computer program’s processing of human language. NLP tasks include text translation, sentiment analysis, and speech recognition. Current approaches to NLP are based on machine learning. One of the best-known examples of NLP is spam detection, which looks at an email’s subject line and text and decides if it’s junk.

- Robotics: This engineering field focuses on designing and manufacturing robots that perform tasks that are difficult for humans to perform or to perform consistently. For example, robots are used on car production assembly lines and by NASA to move large objects in space. Researchers also use machine learning to build robots that can interact in social settings.

- Self-driving Cars: Autonomous vehicles use a combination of computer vision, image recognition, and deep learning to build automated skills to pilot a car while staying in a given lane and avoiding unexpected obstructions, such as pedestrians.

- Text, Image, and Audio Generation: Generative AI techniques, which create various types of media from text prompts, are being applied extensively across businesses to create a seemingly limitless range of content types, from photorealistic art to email responses and screenplays.

- AI is revolutionizing software coding and IT processes: Though new generative AI tools can produce application code based on natural language prompts, they are still in the early stages of development and are not likely to replace software engineers anytime soon. Additionally, AI is currently being implemented to automate many IT processes, such as data entry, fraud detection, customer service, and predictive maintenance and security.

- Security: AI and machine learning are go-to buzzwords that vendors use to promote their products, so buyers should be cautious. Still, AI techniques are proving effective in several aspects of cybersecurity, including anomaly detection, solving the false-positive problem, and conducting behavioral threat analytics. Organizations use machine learning in security information and event management (SIEM) software and related areas to detect anomalies and identify suspicious activities that may indicate a threat. By analyzing data and using logic to identify similarities to known malicious code, AI can detect new and emerging attacks much sooner than humans and previous generations of technology.

- Manufacturing: The manufacturing industry has led the way in integrating robots into its production processes. Initially, industrial robots were programmed to perform a single task and operated separately from human workers. Now they are evolving into cobots: smaller, multitasking robots that work collaboratively with humans and can take on more responsibilities in workspaces such as warehouses and factory floors.

- Banking: The banking industry is using AI in several ways. Chatbots inform customers about services and offerings and process transactions that don’t require human intervention. AI virtual assistants are also used to reduce the cost of complying with banking regulations. Moreover, banks use AI to improve their decision-making for loans, set credit limits, and identify profitable investment opportunities.

- Transportation: AI is becoming essential to transportation technology. It helps operate self-driving vehicles, manage traffic flow, predict flight delays, and make ocean shipping safer and more efficient. In supply chains, AI is replacing older methods of forecasting demand and predicting disruptions. This became more pressing during the COVID-19 pandemic, when companies faced unexpected changes in supply and demand for goods.
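The anomaly detection mentioned in the security item above can be sketched in a few lines of Python. This is only a statistical baseline (a z-score check), not what any particular SIEM product does; the function name `find_anomalies`, the threshold of three standard deviations, and the login counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_values, threshold=3.0):
    """Flag new values that lie more than `threshold` standard deviations
    away from the mean of the baseline observations."""
    centre = mean(baseline)
    spread = stdev(baseline)
    return [v for v in new_values if abs(v - centre) > threshold * spread]


# Hypothetical data: failed logins per hour during normal operation
normal_hours = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
# New observations to screen against that baseline
latest_hours = [5, 7, 48, 6]

print(find_anomalies(normal_hours, latest_hours))  # prints [48]
```

Machine learning approaches replace the fixed mean-and-spread rule with a model learned from much richer data, but the underlying idea is the same: describe what normal looks like, then flag what deviates from it.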

Augmented Intelligence vs. Artificial Intelligence

According to some industry experts, the term “artificial intelligence” is too closely associated with science fiction and movies, which has led to unrealistic expectations about AI’s impact on our lives and jobs. They propose the term “augmented intelligence” to distinguish AI tools that work alongside humans to support and enhance their abilities from the autonomous AI systems of popular culture, such as HAL 9000 and the Terminator.

| Aspect | Augmented Intelligence | Artificial Intelligence |
|---|---|---|
| Definition | AI systems that enhance human capabilities and decision-making | AI systems that can perform tasks without human intervention |
| Function | Support and amplify human intelligence | Replace human intelligence |
| Example | Virtual assistants, chatbots, recommendation systems | Autonomous vehicles, deep learning, neural networks |
| Goal | Improve efficiency and accuracy of human decision-making | Perform tasks faster and more efficiently than humans |
| Limitations | Requires human input and decision-making | Limited by their programming and data inputs |
| Ethics | Augments human decision-making and preserves human control | Can raise ethical concerns about replacing human jobs and decision-making |
| Adoption | Widely adopted in various industries, such as healthcare and finance | Still in the early stages of adoption in some industries |

It is important to note that augmented intelligence and artificial intelligence are not opposing concepts but rather different approaches to designing AI systems that address different needs and use cases.

Ethical Challenges of AI

The use of AI in business presents a new set of possibilities, but it also raises ethical questions. One issue is the potential for bias in AI systems because machine learning algorithms only learn from the given data. Bias can be introduced if the data selected for training the AI program is limited or flawed. This is a particular concern when using deep learning and generative adversarial network (GAN) applications, which are often inherently unexplainable.

Explainability is another challenge to using AI, especially in industries with strict regulatory compliance requirements. For example, financial institutions are required to explain credit-issuing decisions. However, describing how the decision was made can be challenging when AI programming is used to make decisions. This is because AI tools operate by teasing out subtle correlations between thousands of variables, which can make the decision-making process a black box.
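To make the contrast concrete, the sketch below shows a model that is easy to explain: a linear score in which every input contributes a visible amount to the result. The feature names, weights, and approval threshold are invented for illustration and do not reflect any real lender’s criteria. Deep learning models offer no such term-by-term breakdown, which is the black-box problem described above.

```python
# Hypothetical weights for an illustrative linear credit score
WEIGHTS = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -10.0,
}
APPROVAL_THRESHOLD = 30.0  # assumed cut-off, chosen only for this example


def explain_decision(applicant):
    """Return the decision, the total score, and each feature's contribution."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    decision = "approve" if score >= APPROVAL_THRESHOLD else "decline"
    return decision, score, contributions


applicant = {"income_thousands": 60, "years_employed": 4, "missed_payments": 2}
decision, score, contributions = explain_decision(applicant)

print(decision, score)  # prints "decline 18.0"
# List the contributions from most negative to most positive.
for name, value in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"{name}: {value:+.1f}")
```

Here the lender can point to the missed payments as the reason for the decision. With a model built from thousands of interacting variables, no comparable one-line explanation is available without additional tooling.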

Other ethical challenges of AI include misuse, such as deepfakes and phishing; legal concerns, such as AI libel and copyright issues; job displacement; and data privacy concerns, particularly in banking, healthcare, and law. It is essential for organizations to factor in ethics when training their AI programs and to strive to avoid bias.

The Challenges of Regulating AI

Despite the potential risks associated with AI, few regulations currently govern the use of AI tools. Laws that do exist tend to pertain to AI indirectly and, in some cases, limit the use of AI tools altogether. For instance, U.S. Fair Lending regulations require financial institutions to explain credit decisions to potential customers, which limits the extent to which lenders can use deep learning algorithms.

In Europe, the General Data Protection Regulation (GDPR) already limits the training and functionality of many consumer-facing AI applications by imposing strict limits on how enterprises can use consumer data. Policymakers in the U.S. have yet to issue AI legislation, though this may change soon.

The White House Office of Science and Technology Policy (OSTP) published a “Blueprint for an AI Bill of Rights” in October 2022, guiding businesses on implementing ethical AI systems. Additionally, the U.S. Chamber of Commerce called for AI regulations in a report released in March 2023.

Crafting laws to regulate AI is challenging, partly because AI comprises a variety of technologies that companies use for different ends. Regulation can also come at the cost of AI progress and development, and the rapid evolution of AI technologies makes meaningful regulation hard to formulate. AI’s lack of transparency makes it difficult to see how algorithms reach their results. Novel applications such as ChatGPT and DALL-E can make existing laws instantly obsolete. And the regulations that governments do manage to craft don’t stop criminals from using the technology with malicious intent.

In recent interviews, Elon Musk has talked about how AI can change the world, but he has also said that its unregulated development is stressing him out. Musk believes that, without proper regulation, AI could lead to catastrophic consequences, such as its use for biowarfare. He noted that some medicines have already been created with the help of AI, a capability that could be dangerous if used for malicious purposes.

A Brief History of AI: From Ancient Myths to Modern Computers

The idea of inanimate objects possessing intelligence dates back to ancient times. In Greek mythology, the god Hephaestus was portrayed as creating robot-like servants made of gold. Similarly, ancient Egyptian engineers built statues of gods animated by priests. Over the centuries, philosophers and thinkers such as Aristotle, Ramon Llull, René Descartes, and Thomas Bayes used the tools and logic of their times to describe human thought processes as symbols, paving the way for AI concepts such as general knowledge representation.

The 19th and early 20th centuries brought the foundational work that led to the modern computer. In 1836, Cambridge University mathematician Charles Babbage and Ada Lovelace, Countess of Lovelace, produced the first design for a programmable machine.

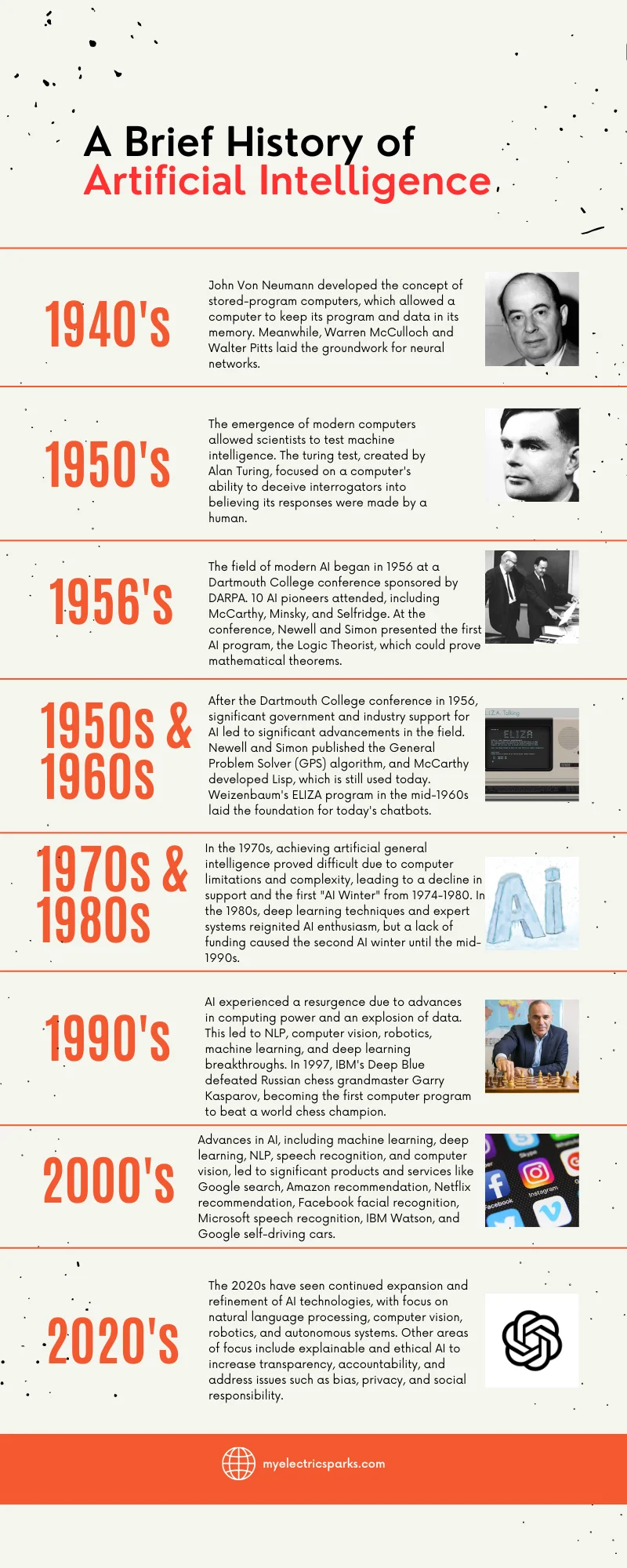

1940s. In the 1940s, Princeton mathematician John von Neumann conceived the architecture for the stored-program computer: the idea that a computer could keep both its program and its data in memory. During the same decade, Warren McCulloch and Walter Pitts laid the foundation for neural networks.

1950s. The 1950s saw the emergence of modern computers, which allowed scientists to test their ideas about machine intelligence. One way to determine whether a computer had intelligence was the Turing test, devised by Alan Turing, a British mathematician and World War II code-breaker. The Turing test focused on a computer’s ability to fool interrogators into believing its responses were made by a human.

1956. The modern field of artificial intelligence is widely said to have begun in 1956 at a summer conference at Dartmouth College. The conference, funded by the Rockefeller Foundation, was attended by 10 leading figures in the field, including AI pioneers Marvin Minsky, Oliver Selfridge, and John McCarthy, who is credited with coining the term “artificial intelligence.” At the conference, Allen Newell, a computer scientist, and Herbert A. Simon, an economist, political scientist, and cognitive psychologist, presented their groundbreaking Logic Theorist program. This program could prove certain mathematical theorems and is often called the first AI program.

1950s and 1960s. Following the Dartmouth College conference, leaders in the field of AI predicted that a man-made intelligence equivalent to the human brain was just around the corner. This attracted major government and industry support for nearly 20 years and resulted in significant advances in AI. For example, Newell and Simon published the General Problem Solver (GPS) algorithm in the late 1950s, which laid the foundation for more sophisticated cognitive architectures. McCarthy also developed Lisp, a language for AI programming that is still used today. In the mid-1960s, MIT Professor Joseph Weizenbaum created ELIZA, an early NLP program that laid the foundation for today’s chatbots.

1970s and 1980s. However, the achievement of artificial general intelligence proved to be more elusive than predicted. This was due to limitations in computer processing and memory, as well as the complexity of the problem. As a result, government and corporate support for AI research declined, leading to a fallow period from 1974 to 1980 known as the first “AI winter.” In the 1980s, research on deep learning techniques and the adoption of Edward Feigenbaum’s expert systems sparked a new wave of AI enthusiasm. This was followed by another collapse in government funding and industry support, resulting in the second AI winter, which lasted until the mid-1990s.

1990s. The late 1990s saw an AI renaissance, thanks to increases in computational power and an explosion of data. This propelled breakthroughs in NLP, computer vision, robotics, machine learning, and deep learning. In 1997, IBM’s Deep Blue defeated Russian chess grandmaster Garry Kasparov, becoming the first computer program to beat a world chess champion.

2000s. Further advances in machine learning, deep learning, NLP, speech recognition, and computer vision led to products and services that shape our lives today. These include Google’s search engine, Amazon’s recommendation engine, Netflix’s recommendation system for movies, Facebook’s facial recognition system, and Microsoft’s speech recognition system for transcribing speech into text. Additionally, IBM launched Watson, and Google started its self-driving car project.

Overall, the 2020s have seen a continued expansion and refinement of AI technologies, including advances in natural language processing, computer vision, robotics, and autonomous systems. In addition to generative AI, other focus areas include explainable AI, which seeks to increase transparency and accountability in AI systems, and ethical AI, which addresses bias, privacy, and social responsibility issues.

As AI becomes increasingly integrated into our daily lives, it is important to ensure that it is developed and deployed to benefit society. This requires collaboration and cooperation between researchers, developers, policymakers, and other stakeholders to ensure that AI is used ethically, responsibly, and in the service of human well-being.

What Are AI Tools and Services?

The world of AI is constantly evolving, with new tools and services emerging at an exceptional rate. This evolution can be traced back to the 2012 AlexNet neural network, which paved the way for high-performance AI built on GPUs and large data sets. AI models have become more scalable and robust, able to train neural networks on massive amounts of data across multiple GPU cores in parallel.

A close collaboration between industry giants like Google, Microsoft, and OpenAI and hardware innovators like Nvidia has led to AI performance and scalability breakthroughs in recent years. These advancements have allowed more extensive and complex AI models to be run on connected GPUs, leading to game-changing AI tools and service improvements.

This collaboration has been critical to the success of ChatGPT, as well as many other cutting-edge AI services. Keep reading for a rundown of the essential innovations in AI tools and services.

Transformers. Google has been at the forefront of developing a more efficient process for AI training by using a large cluster of commodity PCs with GPUs, leading to the discovery of transformers that automate many aspects of training AI on unlabeled data.

Hardware optimization. Hardware vendors like Nvidia have also optimized the microcode for running popular algorithms across multiple GPU cores in parallel, which Nvidia says has contributed to a million-fold improvement in AI performance. Nvidia also collaborates with cloud providers to make this capability more accessible through IaaS, SaaS, and PaaS models.

Generative pre-trained transformers. The AI stack has evolved rapidly. Vendors like OpenAI, Nvidia, Microsoft, and Google now provide generative pre-trained transformers (GPTs) that can be fine-tuned for a specific task at reduced cost and in less time than training a model from scratch. This shortens time to market and reduces risk.

AI cloud services. The data engineering and data science tasks required to integrate AI capabilities into new or existing apps can be significant roadblocks for enterprises. All major cloud providers offer branded AI-as-a-service offerings to streamline data prep, model development, and application deployment.

Cutting-edge AI models as a service. Leading AI model developers offer cutting-edge AI models on top of cloud services. OpenAI has language models optimized for chat, NLP, image generation, and code generation available through Azure. At the same time, Nvidia offers foundational models optimized for text, images, and medical data across all cloud providers. Many other players also offer models customized for various industries and use cases.

The following table summarizes some of the standard tools used in artificial intelligence (AI) development:

| Tool Category | Examples |
|---|---|
| Programming languages | Python, R, Java, C++ |
| Machine learning frameworks | TensorFlow, PyTorch, Keras |
| Natural language processing (NLP) tools | NLTK, spaCy, GPT |
| Robotics development tools | ROS (Robot Operating System) |
| Data visualization tools | Tableau, Power BI |

Note that this is not an exhaustive list, and many other tools and technologies are used in AI development.

FAQs about Artificial Intelligence:

What is artificial intelligence, in simple words?

What are the 4 types of AI?

What is artificial intelligence in one example?

What are the risks of artificial intelligence (AI)?

- Job displacement as AI systems automate specific tasks previously performed by humans.

- Biases in AI algorithms that reflect or amplify existing social and economic inequalities.

- Privacy and security risks related to the collection and use of personal data by AI systems.

- Ethical concerns related to the development and use of autonomous weapons and the potential for super AI to pose an existential risk to humanity.