Meta, the parent company of Facebook, just announced an incredible breakthrough in artificial intelligence! They’ve developed a cutting-edge model called “segment anything” to locate objects accurately within images and videos. This is a significant step forward in image recognition technology, and it has enormous potential for advancing research in segmentation. With “segment anything,” Meta aims to provide a more comprehensive understanding of visual content, which is truly unique!

On April 5th, Meta released an AI model to identify specific objects within an image. In addition, they also shared a dataset of image annotations that they claim is the largest of its kind.

The company’s research division announced through a blog post that they had developed a new Segment Anything Model or SAM model. According to the post, this model can recognize objects in images and videos even if it hasn’t encountered them before during its training.

Segmentation is identifying the pixels in an image that belong to a particular object. Meta says their technology aims to make this process more accessible and widespread. They want to “democratize” segmentation for various applications, such as analyzing scientific images and editing photos.

Developing a precise segmentation model for specific tasks usually requires specialized work from technical experts with access to AI-enabled infrastructure and massive datasets. However, Meta has introduced a new project that offers a general Segment Anything Model (SAM) and a Segment Anything 1-Billion mask dataset (SA-1B), which can help simplify the process. These resources are now available to users who need them.

The Segment Anything Model (SAM) has a broad range of applications, including the ability to work with underwater photography. It can potentially improve creative endeavors, such as video editing and extracting specific parts from images to make collages. Moreover, SAM can enhance scientific investigations of natural phenomena on Earth or in space.

According to Meta, they aimed to create a foundation model for image segmentation that is versatile and can adapt to specific tasks. They compared this approach to using prompts in models for natural language processing. In other words, the model they developed is a general one that can be fine-tuned to perform specific tasks.

Training an image segmentation model with videos, images, and text is not as straightforward. This is because the segmentation data required for training is not readily available online or anywhere else. Hence, with the Segment Anything project, Meta aimed to create a segmentation dataset of unmatched size while also developing a general segmentation model that can be easily fine-tuned for specific tasks.

Including generative AI “creative aids” into Meta’s applications has been highlighted as a priority for Chief Executive Mark Zuckerberg this year. Meta already uses a technology similar to SAM for tasks like tagging photos, moderating prohibited content, and recommending posts to Facebook and Instagram users.

However, the release of SAM will make this type of technology more widely available to users. The model and dataset can be downloaded under a non-commercial license, and users must agree to use the accompanying prototype for research purposes only when uploading their images.

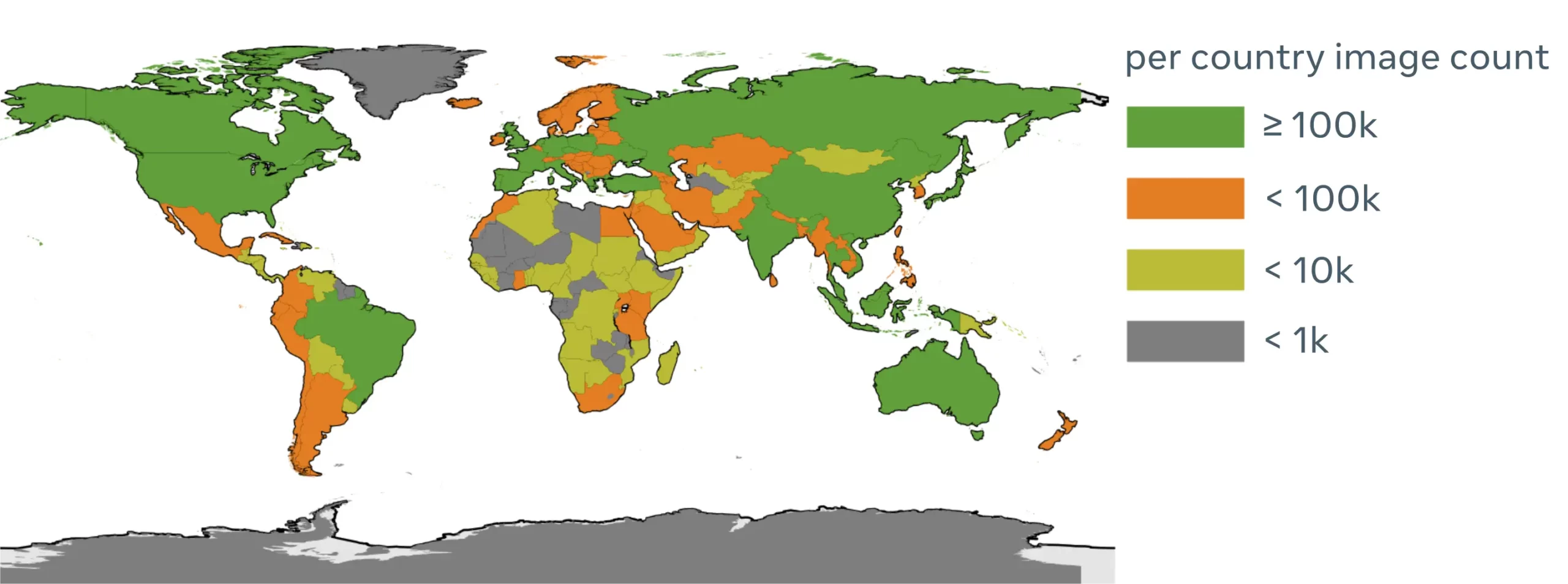

The following table provides information about a dataset made available for research purposes.

The dataset includes images and mask annotations, with the primary data type being images and the intended use cases for training and testing generic object segmentation models.

| Column 1 | Column 2 |

|---|---|

| Key Application | Computer Vision, Segmentation |

| Intended Use Cases | Research purposes only |

| Primary Data Type | Images, Mask annotations |

| Data Function | Training, testing |

| Dataset Characteristics | ✔ Total number of images: 11M ✔ Total number of masks: 1.1B ✔ Average masks per image: 100 ✔ Average image resolution: 1500×2250 pixels No class labels for images or mask annotations |

| Labels | Class agnostic mask annotations |

| Nature Of Content | Images licensed from a large photo company, covering various subjects |

| Privacy PII | Faces and license plates de-identified |

| License | Images licensed from a large photo company covering various subjects |

| Access Cost | Open access |

| Data Collection | Images licensed from a photo company, masks generated by the Segment Anything Model (SAM) |

| Sampling Methods | Unsampled |

| Labeling Methods | Automatically generated masks (more details in the Segment Anything paper) |

| Label types | Masks are provided in the COCO run-length encoding (RLE) annotation format |

| Labeling procedure | Automatic – generated by a model trained on annotations from expert human annotators |

The final dataset contains over 1.1 billion segmentation masks from around 11 million licensed images. These images have been selected in a privacy-preserving manner, ensuring that sensitive information such as faces and license plates are de-identified.